Objective of this project:

- Develop a flexible hardware FMA unit capable of handling various floating-point (FP) formats — both standard (Half, Single) and emerging (E4M3, E5M2, BFloat16, DLFloat16, TF32).

- Enable configurability in exponent and mantissa bit widths (from 8- to 32-bit FP numbers) to adapt precision and dynamic range for specific AI applications.

- Support both multiple-precision and mixed-precision operations:

  - Multiple precision: Perform several independent FMA operations in parallel (e.g., 4×8-bit, 2×16-bit, or 1×32-bit FMAs).

  - Mixed precision: Accumulate several low-precision dot products into higher-precision results to enhance numerical stability and prevent overflow/underflow.

- Improve computational efficiency by reducing rounding steps and resource duplication, thus minimizing hardware area, delay, and power.

- Introduce an innovative accumulation method for dot products and aligned addends that maintains accuracy and reduces rounding errors.

- Achieve better trade-offs between hardware complexity, performance, and accuracy compared to existing FMA architectures.
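The objectives above can be illustrated with a small behavioral model. The following Python sketch is not the project's RTL: the names `FPFormat`, `FORMATS`, `decode`, `split_lanes`, and `dot_accumulate` are hypothetical, the exponent/mantissa widths are the commonly published ones for each format, and an IEEE-754-style bias and subnormal rule is assumed throughout. Several of these formats deviate from IEEE 754 in their special-value handling, which the sketch does not model, and the final rounding to an output format is omitted.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class FPFormat:
    """Hypothetical descriptor: 1 sign bit + exp_bits + man_bits."""
    name: str
    exp_bits: int
    man_bits: int

    @property
    def width(self) -> int:
        return 1 + self.exp_bits + self.man_bits

    @property
    def bias(self) -> int:
        # Assumption: IEEE-style bias of 2^(exp_bits - 1) - 1.
        return (1 << (self.exp_bits - 1)) - 1


FORMATS = {
    "E4M3": FPFormat("E4M3", 4, 3),
    "E5M2": FPFormat("E5M2", 5, 2),
    "Half": FPFormat("Half", 5, 10),
    "BFloat16": FPFormat("BFloat16", 8, 7),
    "DLFloat16": FPFormat("DLFloat16", 6, 9),
    "TF32": FPFormat("TF32", 8, 10),
    "Single": FPFormat("Single", 8, 23),
}


def decode(bits: int, fmt: FPFormat) -> Fraction:
    """Decode a finite bit pattern into an exact rational value."""
    sign = (bits >> (fmt.exp_bits + fmt.man_bits)) & 1
    exp = (bits >> fmt.man_bits) & ((1 << fmt.exp_bits) - 1)
    man = bits & ((1 << fmt.man_bits) - 1)
    if exp == (1 << fmt.exp_bits) - 1:
        # Inf/NaN (or extra finite values) are format-specific; not modeled.
        raise ValueError("all-ones exponent is not modeled in this sketch")
    frac = Fraction(man, 1 << fmt.man_bits)
    if exp == 0:
        # Subnormal: no implicit leading one (IEEE-style assumption).
        value = frac * Fraction(2) ** (1 - fmt.bias)
    else:
        value = (1 + frac) * Fraction(2) ** (exp - fmt.bias)
    return -value if sign else value


def split_lanes(word: int, lane_bits: int, total_bits: int = 32) -> list:
    """Split a word into independent lanes, least-significant lane first."""
    mask = (1 << lane_bits) - 1
    return [(word >> (i * lane_bits)) & mask
            for i in range(total_bits // lane_bits)]


def dot_accumulate(a_word: int, b_word: int, fmt: FPFormat,
                   addend: Fraction) -> Fraction:
    """Mixed-precision sketch: sum low-precision products exactly.

    The exact Fraction accumulator stands in for a wide hardware
    accumulator; rounding would happen once, after this sum.
    """
    acc = addend
    for x, y in zip(split_lanes(a_word, fmt.width),
                    split_lanes(b_word, fmt.width)):
        acc += decode(x, fmt) * decode(y, fmt)
    return acc


if __name__ == "__main__":
    e4m3 = FORMATS["E4M3"]
    # Four E4M3 lanes (3.0, 1.5, 1.0, 2.0) times four lanes of 1.0, plus 0.5.
    print(dot_accumulate(0x40383C44, 0x38383838, e4m3, Fraction(1, 2)))
```

Under these assumptions a 32-bit word yields four 8-bit lanes, two 16-bit lanes, or one 32-bit lane, which mirrors the 4×8-bit, 2×16-bit, and 1×32-bit modes listed above. Keeping the accumulator exact until the end is one way to show why a single final rounding loses less accuracy than rounding after every product.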

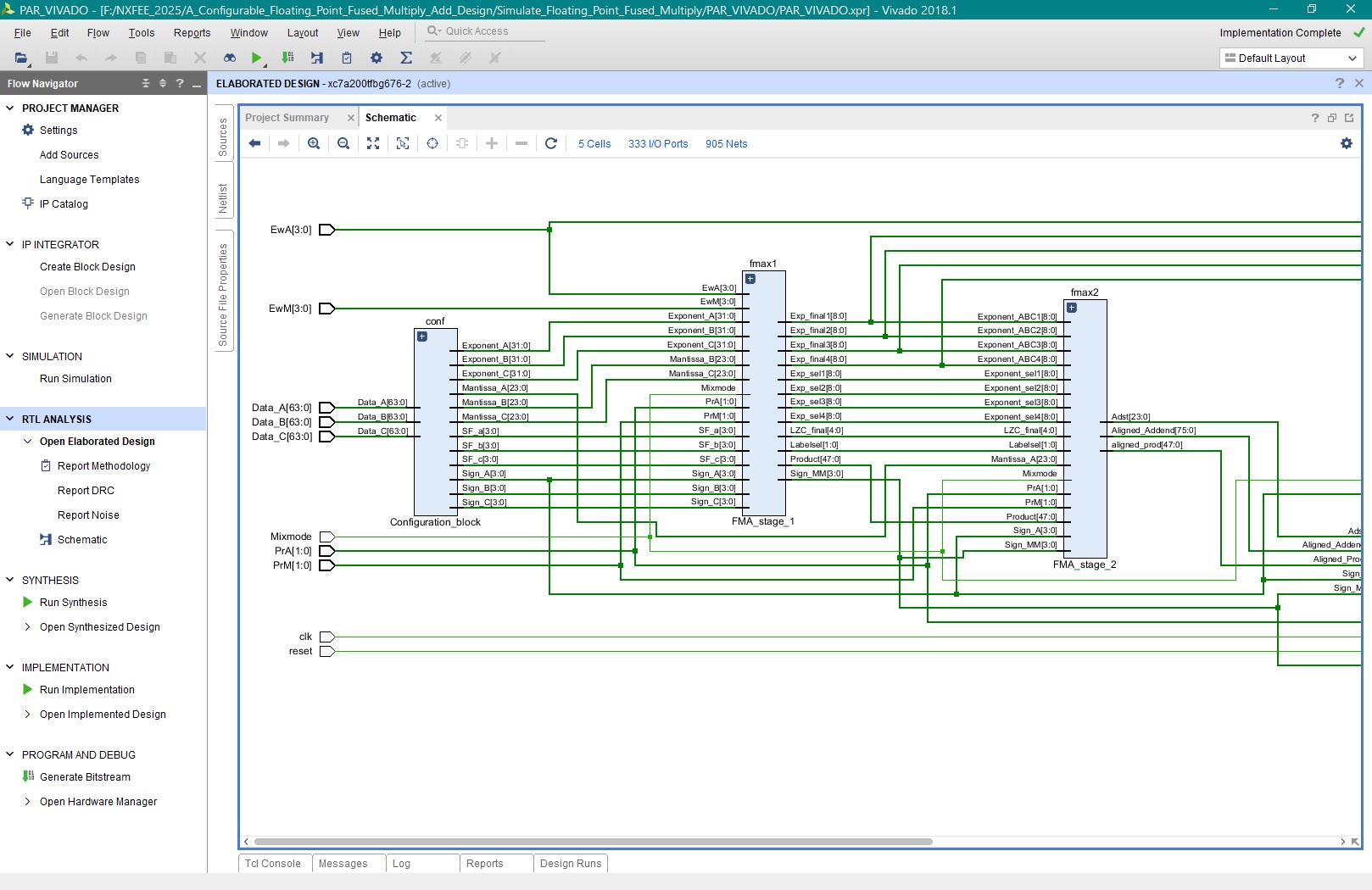

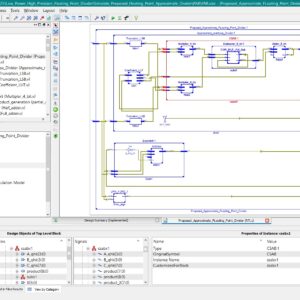

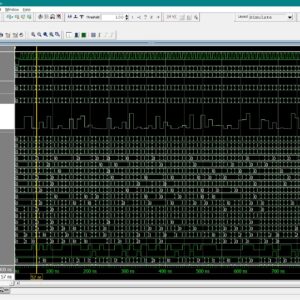

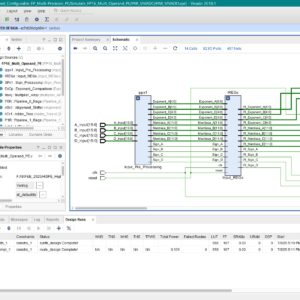

Software Implementation:

- ModelSim and Vivado

A Configurable Floating-Point Fused Multiply-Add Design with Mixed Precision for AI Accelerators
