Proposed Title:

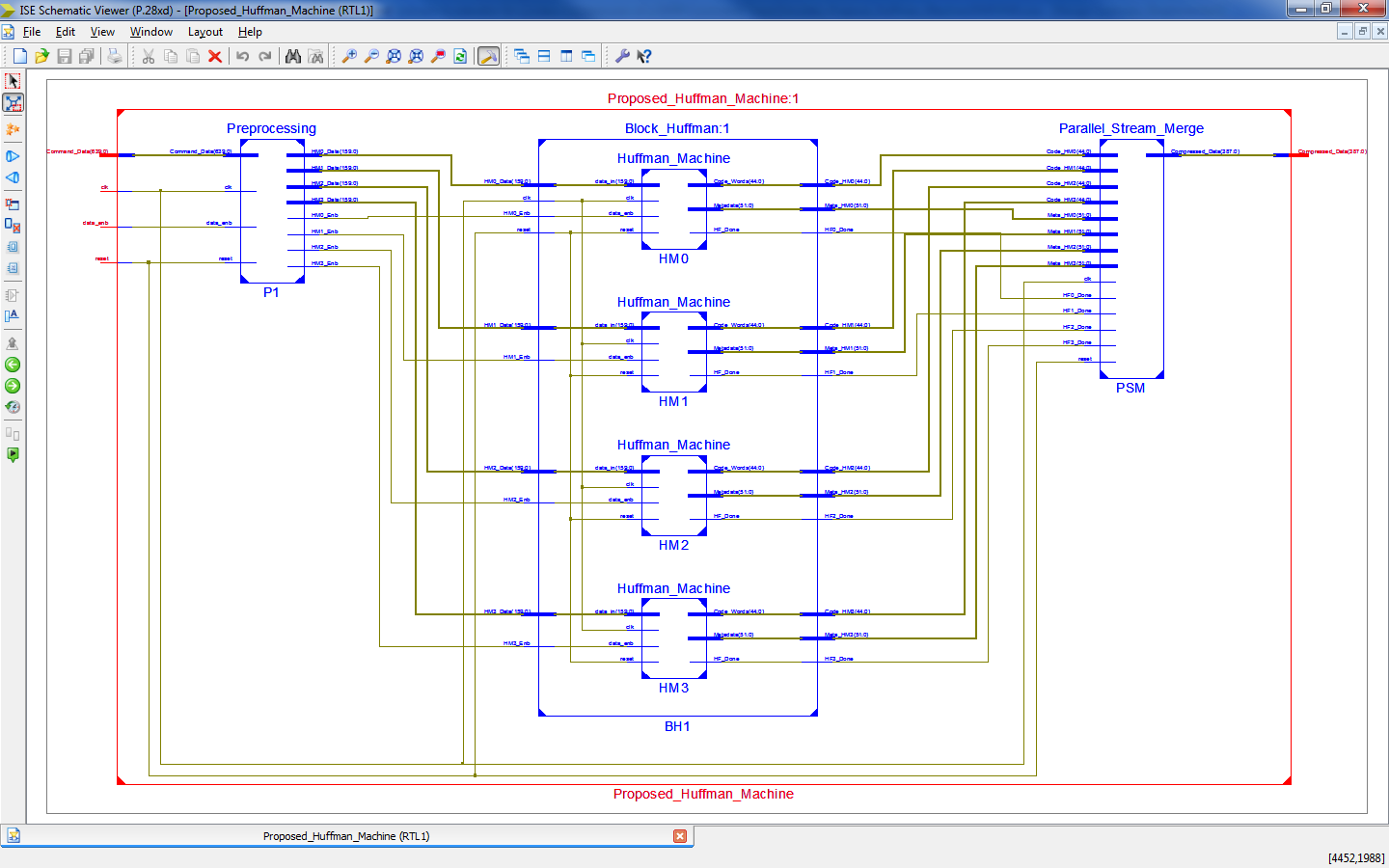

FPGA Implementation of a High-Throughput Lossless Data Compression Technique Using Multi-Machine Huffman Encoding

Improvement of this Project:

To develop a high-throughput hardware accelerator for lossless compression that processes 640 data bits at a time, with each Huffman machine taking 160 data bits, and to compare the design parameters in terms of area, delay and power.
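As a rough software illustration of the multi-machine idea, the sketch below splits a 640-bit input into 160-bit lanes, one per Huffman machine. The widths come from the text; the helper name `split_into_lanes` and the test pattern are hypothetical, and the resulting count of four lanes is simply 640 / 160 rather than a figure stated on this page.

```python
from typing import List

BLOCK_BITS = 640     # total input width stated in the text
MACHINE_BITS = 160   # input width of one Huffman machine stated in the text


def split_into_lanes(bits: str, lane_bits: int = MACHINE_BITS) -> List[str]:
    """Split a bit string into equal-width lanes, one per Huffman machine."""
    if len(bits) % lane_bits != 0:
        raise ValueError("input width must be a multiple of the lane width")
    return [bits[i:i + lane_bits] for i in range(0, len(bits), lane_bits)]


# Hypothetical 640-bit input pattern, used only to exercise the helper.
word = "10" * (BLOCK_BITS // 2)
lanes = split_into_lanes(word)
print(len(lanes), [len(lane) for lane in lanes])
```

In hardware the lanes would be processed in parallel, which is where the throughput gain of a multi-machine arrangement would come from; this model only shows the partitioning.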

Software implementation:

- ModelSim

- Xilinx ISE 14.2

Proposed System:

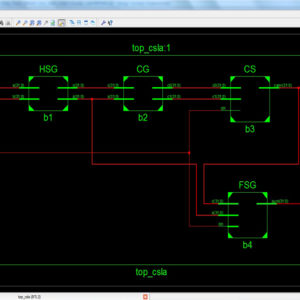

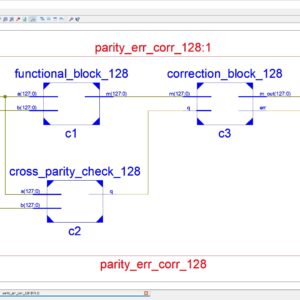

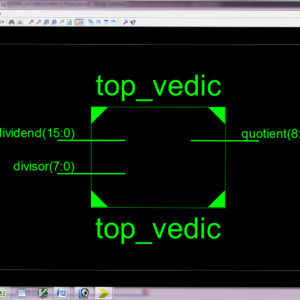

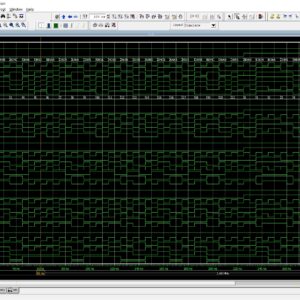

In recent digital networks, transferring and receiving data has become more complex because of the growing number of data bits and memory operations, which leads to data loss and low throughput. This work therefore presents lossless data compression with a low-memory architecture based on the Huffman compression technique. The Huffman machine provides a lossless, low-memory configuration and supports multi-bit operations on the data being compressed. The design takes 640 data bits as input and produces 388 data bits as compressed output, using variable-length block Huffman encoding. Internally, each Huffman machine takes 160 data bits as input and produces a compressed output of 45 code words with 52 metadata. Finally, the design is written in Verilog HDL, synthesized for a Virtex FPGA, and evaluated in terms of area, delay and power.
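To make the variable-length block encoding concrete, here is a minimal software sketch of Huffman coding applied to one 160-bit block. It is not the Verilog design described above: the 8-bit symbol width, the function names and the sample block are all assumptions chosen for illustration, and the metadata (code table) that a real machine must also emit is not modelled.

```python
import heapq
from collections import Counter

SYMBOL_BITS = 8  # assumption: the page does not state the symbol width


def build_code(symbols):
    """Return a {symbol: bit string} prefix code built with Huffman's algorithm."""
    freq = Counter(symbols)
    if len(freq) == 1:                       # degenerate block: one distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries are (weight, unique tiebreak, {symbol: partial code}).
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(sorted(freq.items()))]
    heapq.heapify(heap)
    tiebreak = len(heap)
    while len(heap) > 1:
        w0, _, c0 = heapq.heappop(heap)      # two least frequent subtrees
        w1, _, c1 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in c0.items()}
        merged.update({s: "1" + c for s, c in c1.items()})
        heapq.heappush(heap, (w0 + w1, tiebreak, merged))
        tiebreak += 1
    return heap[0][2]


def encode_block(bits, symbol_bits=SYMBOL_BITS):
    """Encode one block; returns (payload bit string, code table)."""
    symbols = [bits[i:i + symbol_bits] for i in range(0, len(bits), symbol_bits)]
    code = build_code(symbols)
    return "".join(code[s] for s in symbols), code


def decode_block(payload, code):
    """Invert encode_block using the same code table."""
    inverse = {c: s for s, c in code.items()}
    out, current = [], ""
    for bit in payload:
        current += bit
        if current in inverse:               # prefix-free, so first match is the symbol
            out.append(inverse[current])
            current = ""
    return "".join(out)


# Hypothetical 160-bit block: 20 eight-bit symbols with a skewed distribution.
block = "00000000" * 12 + "11111111" * 5 + "10101010" * 3
payload, code = encode_block(block)
print(len(block), "->", len(payload), "payload bits")
```

Decoding with `decode_block(payload, code)` returns the original block, which is what makes the scheme lossless. As a side note on the figures quoted above: if the 45 code words and 52 metadata are read as bit counts per machine (an interpretation, not something the page states), four machines give 4 × (45 + 52) = 388 bits, which matches the stated 388-bit output for a 640-bit input, a compression ratio of roughly 1.65.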


A High-Throughput Hardware Accelerator for Lossless Compression of a DDR4 Command Trace


Terms & Conditions:

- Customers are advised to watch the project output video before payment to check it against their requirements; corrections are applicable.

- After payment, corrections to the project are accepted, but changes to the requirements are charged at updated rates based on the new requirements.

- After payment, student queries about doubts, corrections, software errors, hardware errors and coding are accepted.

- Online support will not be given more than 3 times.

- The first session provides a complete explanation with video file support; the other two sessions provide doubt clarification only.

- For any issue with a software license or system error, we will provide support and rectify it by the end of the day.

- Extra charges apply for a duplicate bill copy. The bill must be paid in full; no part payment will be accepted.

- After payment, you must send the payment receipt to our email ID.

- Powered by NXFEE INNOVATION, Pondicherry.

Payment Method:

- Pay using the Add to Cart option on this page

- Deposit cash/cheque into our account

- Pay by Google Pay/PhonePe: +91 9789443203

- Send Cheque through courier

- Visit our office directly

- Pay using PayPal: Click here to get NXFEE-PayPal link